Introduction

Scaling is a fundamental Kubernetes feature: it lets applications handle increased workloads by adding pods, and save resources by removing pods when demand decreases. In this article, we’ll focus on scaling stateless applications running in Kubernetes, discuss best practices, explore different scaling strategies, and walk through example code with a real-world use case.

Table of Contents

- What is a Stateless Application?

- Why Scale Stateless Applications?

- Types of Scaling in Kubernetes

- Kubernetes Horizontal Pod Autoscaler (HPA)

- Manual Scaling with kubectl

- Example Scenario: Scaling a Node.js Stateless App

- Architecture Diagram for Scalable Stateless Applications

- Best Practices for Scaling

- Conclusion

1. What is a Stateless Application?

A stateless application does not store client session data on the server. Instead, session data is stored on the client side or in a shared storage system. Stateless applications are easier to scale because any pod can handle any request, so pods can be added or removed without synchronizing state between them.

Examples of Stateless Applications:

- REST APIs

- Microservices

- Static websites

- Load balancers

2. Why Scale Stateless Applications?

Scaling stateless applications allows you to:

- Handle high traffic during peak times.

- Optimize costs by reducing pods during low traffic.

- Improve fault tolerance by running multiple instances across nodes.

3. Types of Scaling in Kubernetes

Kubernetes offers two ways to scale the number of pods:

- Manual Scaling: Use kubectl scale to adjust the number of pods manually.

- Automatic Scaling: Kubernetes provides the Horizontal Pod Autoscaler (HPA) to adjust the number of pods based on CPU usage, memory usage, or custom metrics.
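As a sketch of the non-CPU options, the following spec.metrics fragment for an autoscaling/v2 HorizontalPodAutoscaler scales on average memory per pod and on a per-pod custom metric. The threshold values and the metric name http_requests_per_second are illustrative assumptions. Pods-type custom metrics only work if a custom metrics adapter (for example, the Prometheus Adapter) exposes them to the cluster.

```yaml
# Fragment of an autoscaling/v2 HorizontalPodAutoscaler spec (values are illustrative)
metrics:
  # Scale on average memory usage per pod, expressed as an absolute value
  - type: Resource
    resource:
      name: memory
      target:
        type: AverageValue
        averageValue: 500Mi
  # Scale on a per-pod custom metric; requires a custom metrics adapter
  - type: Pods
    pods:
      metric:
        name: http_requests_per_second   # hypothetical metric name
      target:
        type: AverageValue
        averageValue: "100"
```

When several metrics are listed, the HPA computes a desired replica count for each one and uses the largest.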

4. Kubernetes Horizontal Pod Autoscaler (HPA)

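The Horizontal Pod Autoscaler (HPA) automatically adjusts the number of pods in a Deployment (or another scalable workload) based on observed CPU utilization or other application-specific metrics. CPU and memory metrics come from the Kubernetes resource metrics API, which is usually served by Metrics Server, so that component must be running in the cluster for CPU-based autoscaling to work.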

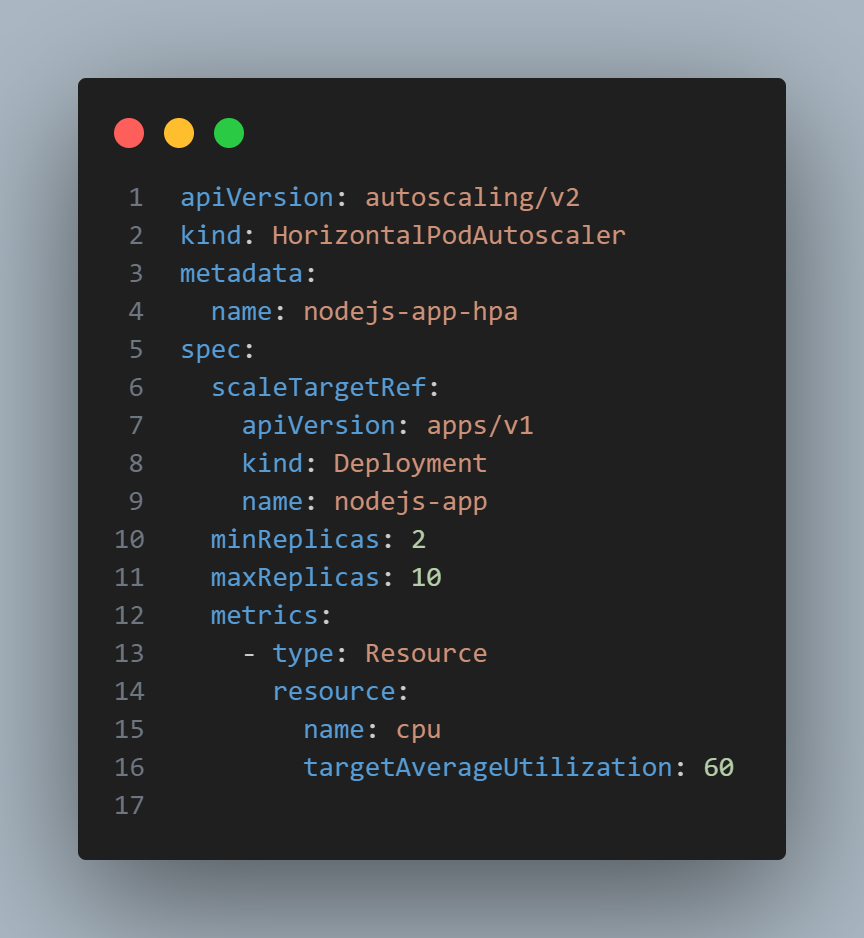

Example YAML Configuration for HPA

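```yaml
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: my-app-hpa
spec:
  # The workload whose replica count this HPA controls
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: my-app
  minReplicas: 2
  maxReplicas: 10
  metrics:
    - type: Resource
      resource:
        name: cpu
        # Target 50% average CPU utilization, relative to the pods' CPU requests
        target:
          type: Utilization
          averageUtilization: 50
```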
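The HPA's core scaling rule, as described in the Kubernetes documentation, multiplies the current replica count by the ratio of the current metric value to the target. The utilization figures in the worked lines below are hypothetical, applied to the 50% CPU target from the configuration above:

```latex
\text{desiredReplicas} = \left\lceil \text{currentReplicas} \times \frac{\text{currentMetricValue}}{\text{desiredMetricValue}} \right\rceil

% Scale-out: 2 pods averaging 100\% CPU utilization, target 50\%
\left\lceil 2 \times \frac{100}{50} \right\rceil = 4

% Scale-in: 4 pods averaging 20\% CPU utilization, target 50\%
\left\lceil 4 \times \frac{20}{50} \right\rceil = \lceil 1.6 \rceil = 2
```

The result is then clamped to the minReplicas and maxReplicas range (2 to 10 here), and by default the HPA skips scaling when the ratio is within 10% of 1.0.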

5. Manual Scaling with kubectl

You can manually scale the number of pods using the following command:

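```shell
kubectl scale deployment my-app --replicas=5
```

To verify the number of pods:

```shell
kubectl get pods
```

If an HPA also targets this Deployment, it can change the replica count again on its next evaluation, so manual scaling is mainly useful for workloads without an autoscaler.

6. Example Scenario: Scaling a Node.js Stateless App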

Step 1: Create a Node.js Application

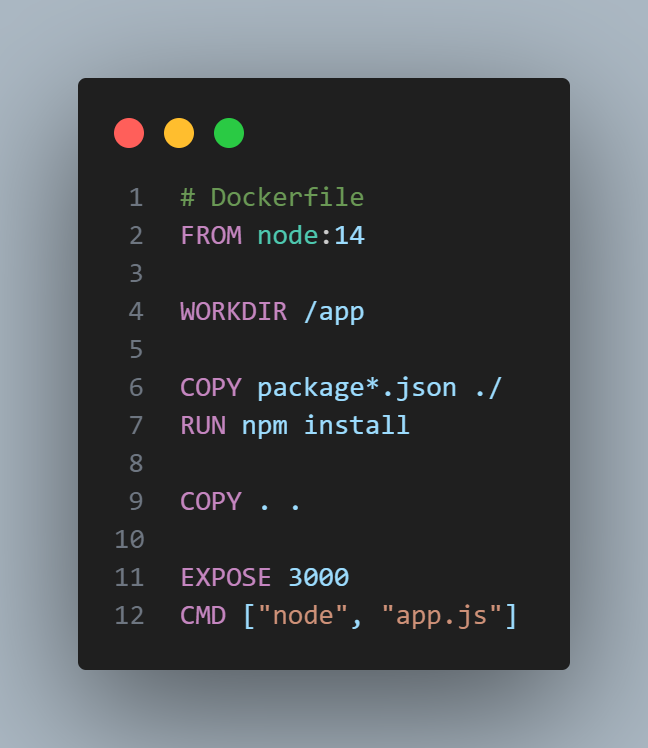

Step 2: Create a Dockerfile

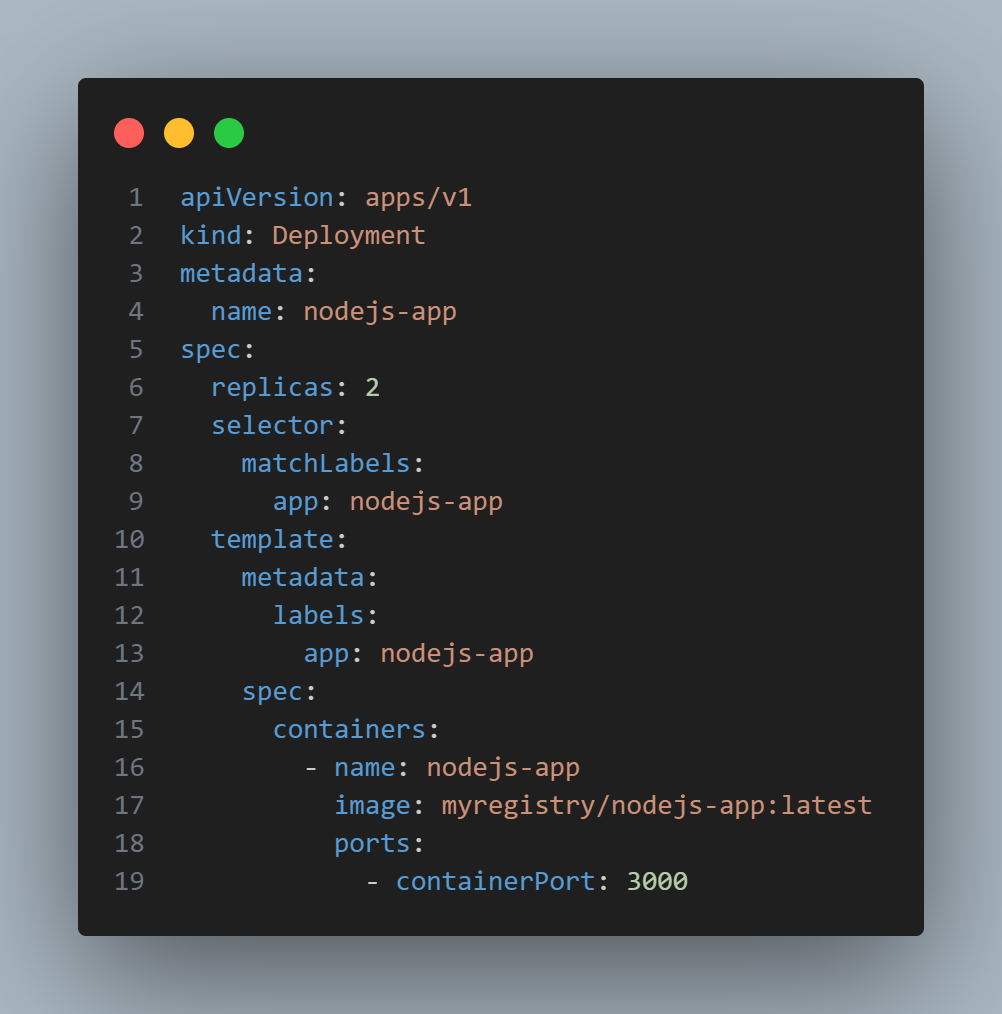

Step 3: Create a Kubernetes Deployment YAML
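A minimal deployment.yaml for this step might look like the sketch below. The image name, container port 3000, and /healthz readiness endpoint are hypothetical and should be replaced with the values from your Node.js app and Dockerfile. The Deployment is named my-app so that the HPA from section 4 can target it, and it sets a CPU request because the HPA measures CPU utilization against that request.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-app
spec:
  # spec.replicas is omitted so the HPA owns the replica count;
  # without an HPA, Kubernetes defaults to 1 replica
  selector:
    matchLabels:
      app: my-app
  template:
    metadata:
      labels:
        app: my-app
    spec:
      containers:
        - name: my-app
          image: my-registry/my-app:1.0.0   # hypothetical image built from the Dockerfile
          ports:
            - containerPort: 3000           # assumes the Node.js app listens on 3000
          resources:
            requests:
              cpu: 100m      # HPA CPU utilization is computed against this value
              memory: 128Mi
            limits:
              cpu: 500m
              memory: 256Mi
          readinessProbe:
            httpGet:
              path: /healthz   # hypothetical health-check endpoint
              port: 3000
            initialDelaySeconds: 5
            periodSeconds: 10
```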

Step 4: Deploy to Kubernetes

```shell
kubectl apply -f deployment.yaml
```

Step 5: Configure HPA
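Optionally, the HPA from section 4 can be tuned with a behavior block placed under its spec. The sketch below limits scale-in to at most one pod per minute, which can reduce replica churn when traffic fluctuates; the specific values are assumptions to adjust for your workload.

```yaml
# Fragment to place under spec: in the autoscaling/v2 HPA from section 4
behavior:
  scaleDown:
    # Use the highest recommendation from the last 5 minutes (also the default)
    stabilizationWindowSeconds: 300
    policies:
      - type: Pods
        value: 1           # remove at most one pod...
        periodSeconds: 60  # ...per 60-second period
```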

Save the HPA manifest from section 4 as hpa.yaml, then apply it:

```shell
kubectl apply -f hpa.yaml
```

7. Architecture Diagram for Scalable Stateless Applications

Architecture Overview:

- Users send requests to a load balancer or Ingress.

- The load balancer or Ingress forwards traffic to a Kubernetes Service, which distributes it across multiple pods.

- Pods are automatically scaled by the Horizontal Pod Autoscaler based on metrics.

- All pods connect to a shared database or message broker for stateful data.
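In manifest form, the request path above can be sketched with a Service that load-balances across the pods and an Ingress that exposes the Service. This assumes an Ingress controller (such as ingress-nginx) is installed, that the pods carry the label app: my-app and listen on port 3000, and that my-app.example.com is a placeholder hostname.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: my-app
spec:
  selector:
    app: my-app          # routes to every ready pod with this label
  ports:
    - port: 80
      targetPort: 3000   # assumed container port
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: my-app
spec:
  rules:
    - host: my-app.example.com   # placeholder hostname
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: my-app
                port:
                  number: 80
```

Because the Service selects pods by label, pods the HPA adds or removes join or leave the rotation automatically.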

8. Best Practices for Scaling Stateless Applications

- Use Readiness Probes: Ensure only healthy pods receive traffic.

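- Set Resource Requests and Limits: Prevent resource contention by specifying CPU and memory requests and limits. The HPA computes CPU utilization relative to the requests, so utilization-based autoscaling does not work without them.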

- Use External Storage: Store state in external databases, message brokers, or cloud storage.

- Monitor Metrics: Use tools like Prometheus and Grafana to monitor application performance.

- Test Scaling: Regularly test autoscaling behavior under simulated load.
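To simulate load, one simple approach, modeled on the load-generator pattern in the Kubernetes HPA walkthrough, is a throwaway busybox Pod that requests the app in a tight loop. The Service name my-app is an assumption and should match your own Service.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: load-generator
spec:
  restartPolicy: Never
  containers:
    - name: load-generator
      image: busybox:1.36
      # Request the (assumed) my-app Service every 10 ms until the Pod is deleted
      command: ["/bin/sh", "-c", "while sleep 0.01; do wget -q -O- http://my-app; done"]
```

While it runs, kubectl get hpa my-app-hpa --watch shows the replica count change; kubectl delete pod load-generator ends the test so you can also observe scale-in.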

9. Conclusion

Scaling stateless applications in Kubernetes ensures your system remains resilient and performant during traffic spikes. By using tools like the Horizontal Pod Autoscaler, you can dynamically adjust the number of pods based on real-time metrics, improving efficiency and reliability.
